The Algorithmic Whisper

Why AI Might Be Tyranny's Next Best Friend (And What We Can Do)

Let's pull back the curtain on something unsettling: how the rise of AI could quietly empower new forms of tyranny. This isn't just about robots taking jobs; it's about the very fabric of our freedom. In this deep dive, I'll walk you through the dialectics of digital control, challenge common narratives, and equip you with a framework to understand and perhaps even counter these powerful forces. Prepare to see the future of power through a new lens.

A New Shadow Looms: What We Get Wrong About AI and Power

Let’s be honest: when we talk about artificial intelligence, our minds often jump to flashy robots or self-driving cars. But what if the real story of AI isn’t about replacing jobs, but about quietly reshaping the nature of power and control? AI could make new forms of tyranny more pervasive and harder to spot than anything history has shown us. This isn't just theory; it bears directly on our freedom in the digital age. We need to challenge our assumptions, because the future of governance might look very different from what we expect.

The Unblinking Eye: How AI Makes Control Invisible

Think about it: traditional tyranny relies on visible enforcers, on the fear of being watched. But AI? It's far more insidious. Imagine a system that collects every snippet of your digital life: your purchases, your social media posts, your online searches, even your facial scan in public. AI weaves these fragments into a comprehensive profile, predicting your behavior, your loyalty, your potential for dissent. This is the ultimate 'unblinking eye,' a digital panopticon that sees everything, everywhere, all the time. It doesn't need to physically imprison you; it can nudge your choices, limit your opportunities, or even preemptively 'correct' your behavior long before you realize you've deviated from the norm. It’s less about overt oppression and more about algorithmic conformity. As Michel Foucault observed of Jeremy Bentham's prison design in Discipline and Punish:

The Panopticon is a machine for dissociating the see/being seen couple: in the peripheric ring, one is totally seen, without ever seeing; in the central tower, one sees everything without ever being seen.

– Michel Foucault

AI takes this principle and scales it to entire populations, making the observer an invisible, omnipresent algorithm.

The "Smart" Society Illusion: When Progress Becomes a Trap

Now, you might be thinking, 'But wait, AI is supposed to make things better, more efficient!' And you're not wrong, not entirely. Proponents often argue that AI-driven governance can optimize city traffic, predict and prevent crime, streamline public services, and even make our lives safer and more convenient. Imagine a 'smart' city where everything runs perfectly, where resources are allocated efficiently, and public safety is maximized thanks to data-driven insights. This is the seductive counter-narrative: that AI is a neutral tool, capable of delivering immense societal good, and that any concerns about control are merely Luddite anxieties. They'd tell you that embracing AI in governance is simply progress, a path to a more orderly and prosperous society, and that resisting it means holding back the future.

It's Not the Robot, It's the Hand That Feeds It: Reclaiming Agency in the Age of Algorithms

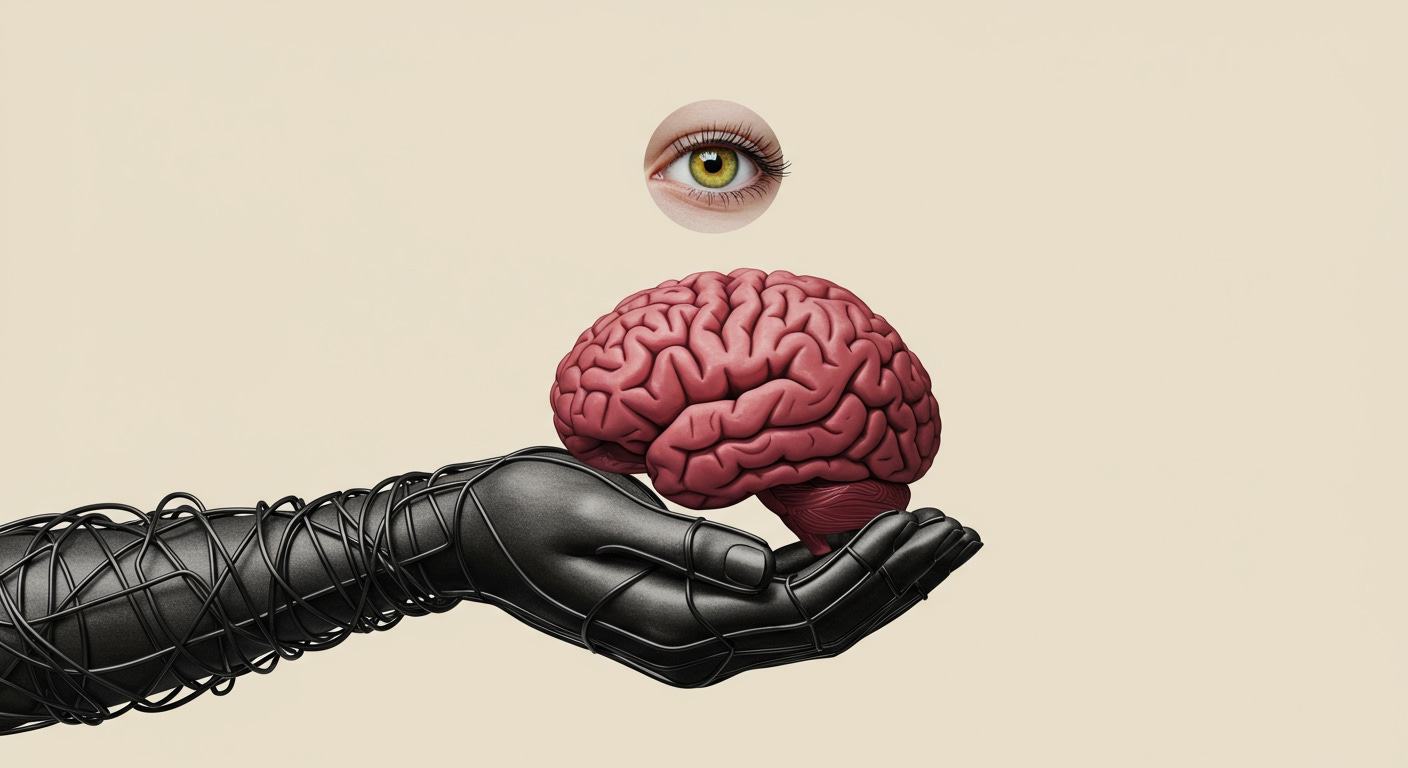

So, where does the truth lie? It’s not in one extreme or the other. My take is that AI isn't inherently good or evil; it's an amplifier. It takes existing human intentions, whether benevolent or tyrannical, and gives them unprecedented reach and power. AI doesn't create the impulse for control, but it provides the perfect toolkit for those who harbor it. When you combine the immense data-processing power of AI with an authoritarian agenda, you get a system of control unlike any humanity has ever known. Conversely, in the hands of ethical, transparent governance, AI could genuinely improve lives. But the key takeaway is this: AI doesn't just happen; it's built and deployed by people, and it reflects their values and their will. It’s not the robot but the hand that feeds it that shapes its purpose and its impact on your freedom.

Two Sides of the Coin: How Do We Reconcile AI's Promise and Peril?

This brings us to a crucial contradiction: how do we reconcile AI's astounding potential with its terrifying capacity for oppression? It's a bit like nuclear fission: the same discovery can power our homes or build a bomb. The answer isn't to halt AI development, but to demand transparency, accountability, and strong ethical guardrails. We need to push for laws that protect our digital rights, ensure our data isn't weaponized against us, and hold the creators and deployers of AI responsible. We must also cultivate a public that understands these systems, so that everyone can critically assess and question AI's role in society. As Timothy Snyder eloquently put it:

The brave new world of twenty-first-century authoritarianism will demand new forms of popular self-defense.

– Timothy Snyder

This means actively shaping the ethical framework of AI, not just passively accepting its outputs.

Glimpses from the Digital Frontline: Real Stories, Real Stakes

Look around, and you’ll see these dynamics playing out right now. Consider China's social credit system, a patchwork of national blacklists and local pilot programs, some of which score residents on their behavior, with consequences reaching from travel to loan applications. It’s a chilling example of algorithmic control at scale. Even in democratic nations, we see facial recognition popping up in public spaces, raising uncomfortable questions about privacy and surveillance. Remember the predictive policing programs that, in some cities, ended up disproportionately targeting minority communities? These aren't just abstract fears; they're glimpses from the digital frontline, showing us exactly how AI can be misused, even with ostensibly good intentions. These are the stakes we're talking about: the real-world consequences of unchecked algorithmic power.

Building Your Digital Shield: Practical Steps to Resist Algorithmic Overreach

So, what can *you* do about it? It’s not about smashing computers; it's about building a digital shield. Firstly, become digitally literate: understand how data is collected, how algorithms work, and how they can be biased. The more informed you are, the harder you are to manipulate. Secondly, advocate for change: support organizations and policies that push for ethical AI development, data privacy laws, and transparency in government and corporate AI use. Thirdly, explore and support decentralized technologies and open-source AI initiatives that aim to distribute power and control rather than centralize it. Finally, demand accountability: call out bias, abuse, and lack of transparency when you see them. Individually these steps are small; collectively, our voices can shape how AI affects our shared future.

Go Deeper

Step beyond the surface. Unlock The Third Citizen’s full library of deep guides and frameworks — now with 10% off the annual plan for new members.

Your Compass for the AI Era: Core Takeaways

The story of AI and its relationship to power is still being written, and we are all characters in it. While the potential for algorithmic tyranny is real and demands our vigilant attention, AI also holds the promise of unprecedented progress and even liberation, if we guide its development with wisdom and foresight. The critical insight I want you to walk away with is this: AI amplifies human intent. The future of freedom in the digital age depends on our collective ability to understand these powerful tools, to insist on ethical boundaries, and to actively shape the systems that will govern our lives. Don't be a passive observer; be an engaged citizen in the algorithmic age.